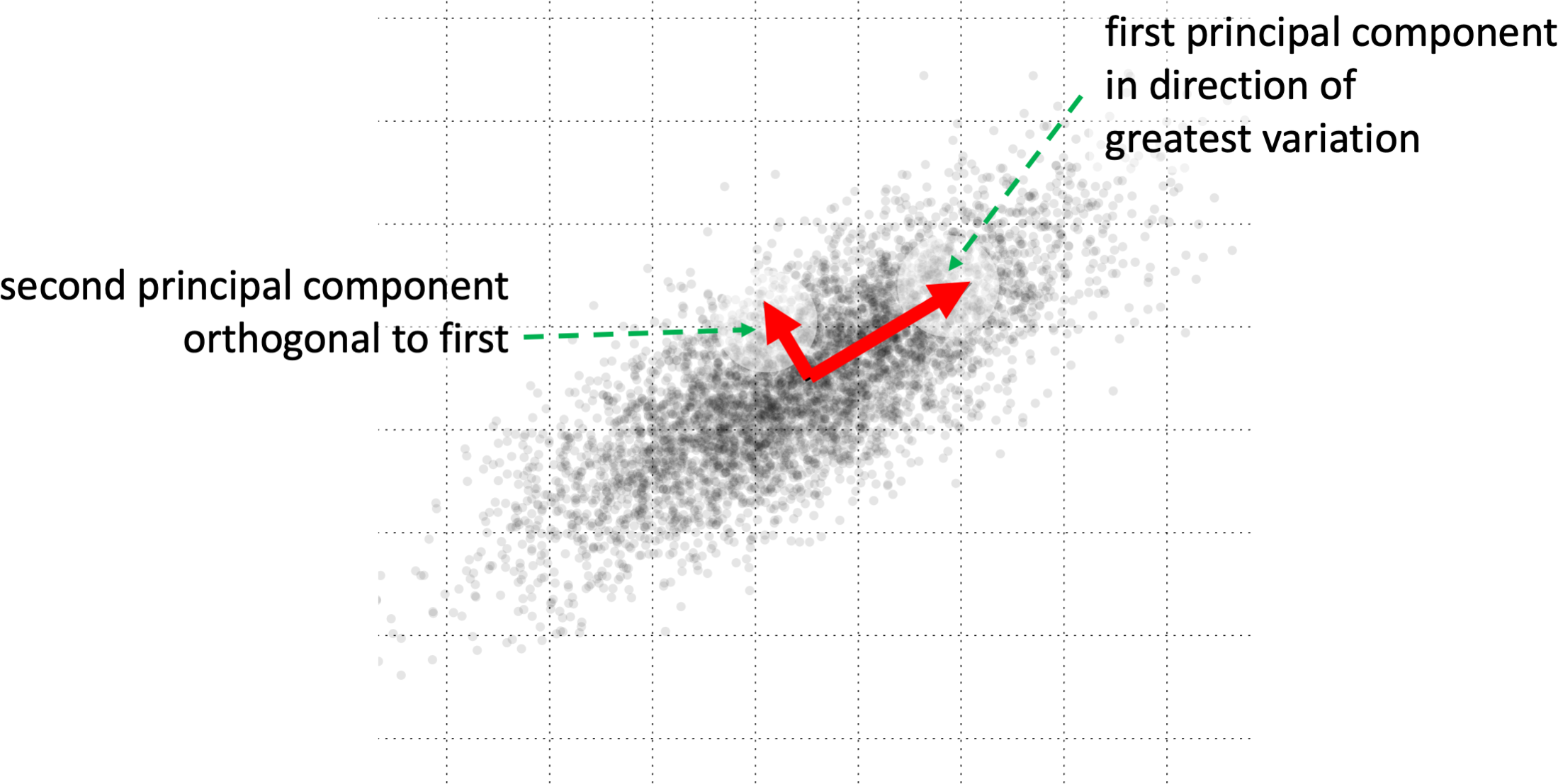

A latent space is a multi-dimensional space that encodes some critical aspects of data. Typically a latent space has lower dimensionality than the original data, but it is not simply a subset of the features or attributes of the original data representation. Statistical methods such as principal component analysis and multidimensional scaling create lower-dimensional representations of this kind. An inner layer of a neural network, particularly at a pinch point (a layer narrower than those around it), is also often used as a latent space. Latent spaces may be used for dimensionality reduction as a preliminary stage before other forms of processing. Alternatively, the structure of the latent space may be used directly, for example the way word2vec encodes parallel relationships, so that the step from cat to kitten is similar to the step from dog to puppy.
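As a rough illustration of the statistical route, the sketch below computes principal components for synthetic 2D data and projects each point onto the first component, giving a one-dimensional latent representation. The data, the function names `principal_components` and `project`, and the choice of NumPy's SVD are all assumptions made for this example, not anything taken from the text.

```python
import numpy as np


def principal_components(data):
    """Return (mean, components, variances) for data of shape (n_samples, n_features).

    Rows of `components` are unit-length principal directions, ordered by
    decreasing variance.
    """
    mean = data.mean(axis=0)
    centered = data - mean
    # SVD of the centred data: the rows of vt are the principal directions.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    variances = singular_values ** 2 / (len(data) - 1)
    return mean, vt, variances


def project(data, mean, components, k):
    """Map data into the k-dimensional latent space spanned by the top k components."""
    return (data - mean) @ components[:k].T


# Hypothetical 2D data lying close to the diagonal line y = x.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
points = np.column_stack([t, t + 0.1 * rng.normal(size=200)])

mean, components, variances = principal_components(points)
latent = project(points, mean, components, k=1)  # one latent coordinate per point
```

Here the single latent coordinate is a blend of both original attributes (roughly their sum), which is the sense in which a latent space is not just a subset of the original features.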

Used in Chap. 13: page 202; Chap. 21: page 340

Principal components for 2D data